Keynote Title:

Multimodal LLMs as Social Media Analysis Engines

Abstract:

Recent research has offered insights into the extraordinary capabilities of Multimodal Large Language Models (MLLMs) across a range of general vision and language tasks, and there is growing interest in how MLLMs perform in more specialized domains. Social media content is inherently multimodal, blending text, images, videos, and sometimes audio. To understand such content effectively, models must interpret the intricate interactions among these communication modalities and how those interactions shape the conveyed message. Understanding social multimedia content remains a challenging problem for contemporary machine learning frameworks. To evaluate MLLMs' capabilities for social multimedia analysis, we select five representative tasks: sentiment analysis, hate speech detection, fake news identification, demographic inference, and political ideology detection. Our investigation begins with a preliminary quantitative analysis of each task on existing benchmark datasets, followed by a careful review of the results and a selection of qualitative samples that illustrate GPT-4V's potential for understanding multimodal social media content. GPT-4V demonstrates remarkable efficacy on these tasks, showing strengths such as joint understanding of image-text pairs, contextual and cultural awareness, and extensive commonsense knowledge. Beyond the well-known hallucination problem, notable challenges remain: GPT-4V struggles with multilingual social multimedia comprehension and has difficulty generalizing to the latest social media trends. We further present several attempts to improve performance on some of the tasks. Our findings point to a promising future for MLLMs in enhancing our understanding of social media content and its users through the analysis of multimodal information.

Bio:

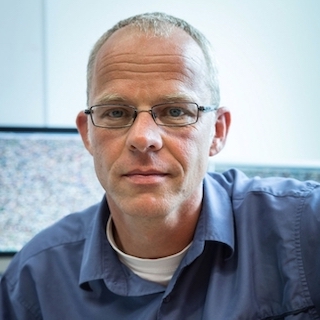

Jiebo Luo is the Albert Arendt Hopeman Professor of Engineering and Professor of Computer Science at the University of Rochester, which he joined in 2011 after a prolific fifteen-year career at Kodak Research Laboratories.

He has authored over 600 technical papers and holds over 90 U.S. patents. His research interests include computer vision, NLP, machine learning, data mining, computational social science, and digital health. He has been actively involved in numerous technical conferences, serving as program co-chair of ACM Multimedia 2010, IEEE CVPR 2012, ACM ICMR 2016, and IEEE ICIP 2017, and as general co-chair of ACM Multimedia 2018 and IEEE ICME 2024.

He has served on the editorial boards of the IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), IEEE Transactions on Multimedia (TMM), IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), IEEE Transactions on Big Data (TBD), ACM Transactions on Intelligent Systems and Technology (TIST), Pattern Recognition, Knowledge and Information Systems (KAIS), Machine Vision and Applications (MVA), and Intelligent Medicine. He was the Editor-in-Chief of the IEEE Transactions on Multimedia (2020-2022). Professor Luo is a Fellow of ACM, AAAI, IEEE, SPIE, and IAPR, as well as a Member of Academia Europaea and the US National Academy of Inventors (NAI). Professor Luo received the ACM SIGMM Technical Achievement Award in 2021 and the William H. Riker University Award for Excellence in Graduate Teaching in 2024.